Dynamic Neural Accelerator®

Dynamic Neural Accelerator®-II Architecture

EdgeCortix Dynamic Neural Accelerator II (DNA-II) is a highly efficient and powerful neural network IP core that can be paired with any host processor. DNA-II achieves exceptional parallelism and efficiency through run-time reconfigurable interconnects between compute elements, supports both convolutional and transformer networks, and is ideal for a wide variety of edge AI applications. Its performance scales from a 1K-MAC configuration upward to support a wide range of target applications and SoC implementations.

The MERA software stack works in conjunction with DNA to optimize computation order and resource allocation when scheduling neural network tasks.

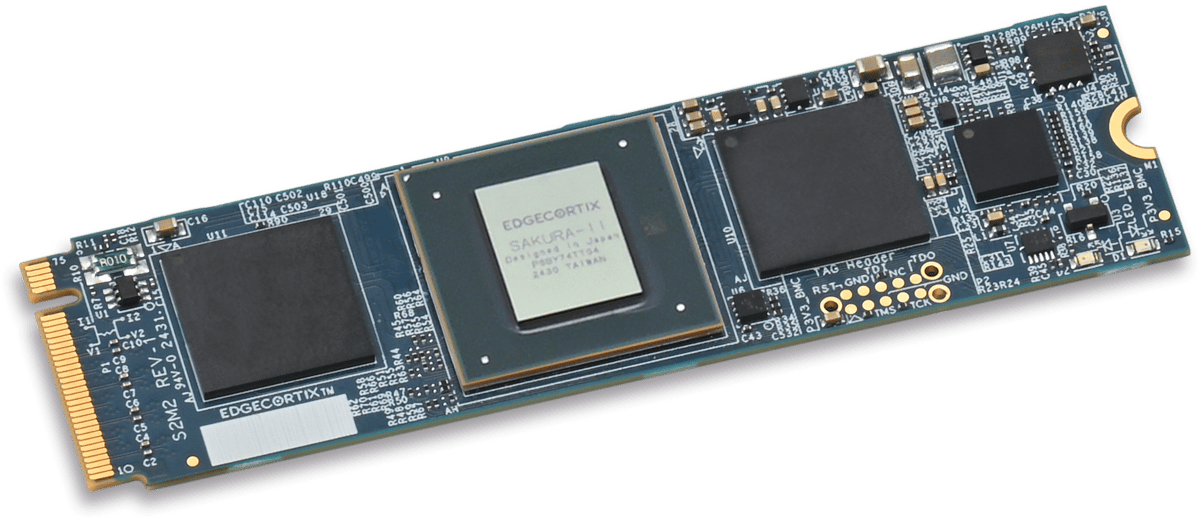

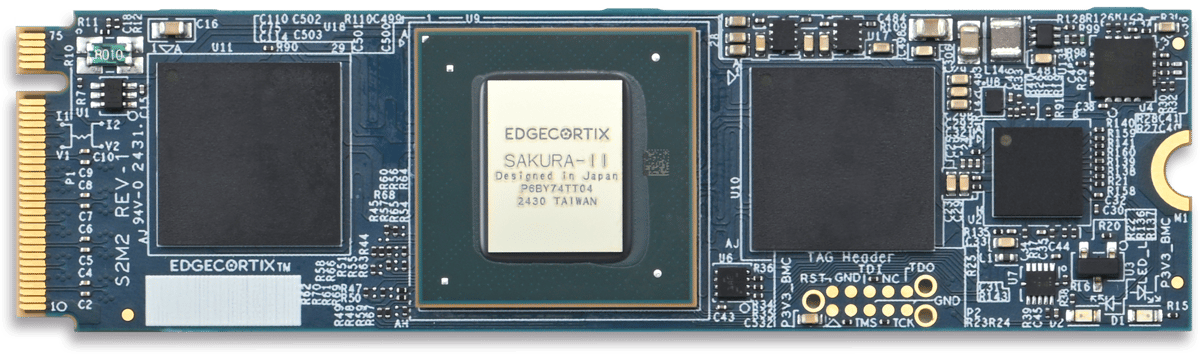

DNA is the driving force behind the SAKURA-II AI Accelerator, providing best-in-class processing at the edge in a small form factor package, and supporting the latest models for Generative AI applications.

EdgeCortix’s SAKURA-II AI Accelerator Brings Low-Power Generative AI to Raspberry Pi 5 and other Arm-Based Platforms

DNA-II Efficiency

Tera Operations Per Second (TOPS) ratings are typically quoted under optimal conditions, with compute units fully parallelized. When AI models are mapped to other vendors' hardware, parallelism drops and achievable TOPS falls to a fraction of the claimed peak capability.

EdgeCortix reconfigures data paths between DNA engines to achieve better parallelism and reduce on-chip memory bandwidth, using a patented runtime reconfigurable datapath architecture.
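The gap between quoted peak TOPS and achievable TOPS can be illustrated with simple arithmetic. The sketch below uses the common convention that one multiply-accumulate (MAC) counts as two operations. The 1 GHz clock and the utilization figures are assumptions chosen for illustration, not DNA-II specifications or measured results, and the function names are hypothetical.

```python
def peak_tops(num_macs: int, clock_hz: float) -> float:
    """Peak tera-operations per second, counting each MAC as 2 ops (multiply + add)."""
    return 2 * num_macs * clock_hz / 1e12


def achievable_tops(peak: float, utilization: float) -> float:
    """Scale peak TOPS by the fraction of compute units kept busy (0.0 to 1.0)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak * utilization


# Illustrative numbers only: a 1K-MAC configuration at an assumed 1 GHz clock.
NUM_MACS = 1024
CLOCK_HZ = 1.0e9  # assumption, not a DNA-II specification

peak = peak_tops(NUM_MACS, CLOCK_HZ)
for utilization in (1.0, 0.8, 0.3):  # hypothetical utilization levels
    print(f"utilization {utilization:.0%}: {achievable_tops(peak, utilization):.3f} TOPS")
```

Under these assumptions, the same peak rating yields very different delivered throughput depending on how well a model's layers keep the compute units occupied, which is the quantity that datapath reconfiguration aims to improve.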

Conventional IP Core Inefficiencies

- Slower processing due to batching

- Higher power consumption due to higher re-use of resources

- Low compute utilization resulting in lower efficiency

DNA Datapath Advantages

- Much higher utilization and efficiency

- Significantly faster processing due to task and model parallelism

- Very low power consumption for edge AI use cases

Get the details in the DNA-II product brief

EdgeCortix Edge AI Platform

Unique Software

Efficient Hardware

Deployable Systems

SAKURA-II M.2 Modules and PCIe Cards

EdgeCortix SAKURA-II can be easily integrated into a host system for software development and AI model inference tasks.

Order an M.2 Module or a PCIe Card and get started today!

"Given the tectonic shift in information processing at the edge, companies are now seeking near-cloud-level performance where data curation and AI-driven decision making can happen together. Due to this shift, the market opportunity for the EdgeCortix solution set is massive, driven by the practical business need across multiple sectors that require both low-power and cost-efficient intelligent solutions. Given the exponential global growth in both data and devices, I am eager to support EdgeCortix in their endeavor to transform the edge AI market with an industry-leading IP portfolio that can deliver performance with orders of magnitude better energy efficiency and a lower total cost of ownership than existing solutions."

"Improving the performance and the energy efficiency of our network infrastructure is a major challenge for the future. Our expectation of EdgeCortix is to be a partner who can provide both the IP and expertise that is needed to tackle these challenges simultaneously."

"With the unprecedented growth of AI/machine learning workloads across industries, the solution we're delivering with leading IP provider EdgeCortix complements BittWare's Intel Agilex FPGA-based product portfolio. Our customers have been searching for this level of AI inferencing solution to increase performance while lowering risk and cost across a multitude of business needs both today and in the future."

“EdgeCortix is in a truly unique market position. Beyond simply taking advantage of the massive need and growth opportunity in leveraging AI across many key business sectors, it’s the business strategy with respect to how they develop their solutions for their go-to-market that will be the great differentiator. In my experience, most technology companies focus very myopically on delivering great code or perhaps semiconductor design. EdgeCortix’s secret sauce is in how they’ve co-developed their IP, applying equal importance to both the software IP and the chip design, creating a symbiotic software-centric hardware ecosystem. This sets EdgeCortix apart in the marketplace.”

"We recognized immediately the value of adding the MERA compiler and associated tool set to the RZ/V MPU series, as we expect many of our customers to implement application software including AI technology. As we drive innovation to meet our customers' needs, we are collaborating with EdgeCortix to rapidly provide our customers with robust, high-performance and flexible AI-inference solutions. The EdgeCortix team has been terrific, and we are excited by the future opportunities and possibilities for this ongoing relationship."