Energy-Efficient Semiconductors for AI, at the Edge, in the Age of Large Language Models

CEO Sakya Dasgupta discusses challenges in the Edge AI market and highlights how our new SAKURA-II Platform addresses them.

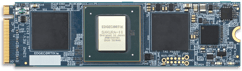

Engineered for demanding Generative AI workloads and designed for flexibility and power efficiency, SAKURA®-II lets users manage a wide range of complex tasks at the edge. With low latency, best-in-class memory bandwidth, high accuracy, and compact form factors, SAKURA-II delivers unparalleled performance and cost-efficiency across the diverse spectrum of edge AI applications. It can deliver up to 60 trillion operations per second (TOPS) of effective 8-bit integer performance and 30 trillion floating-point operations per second (TFLOPS) in 16-bit brain floating-point (bfloat16) format, with built-in mixed-precision support for the rigorous demands of next-generation AI tasks. Interested in joining the next wave of efficient AI inferencing at the edge?

Next Wave of Generative AI at the Edge

Introducing SAKURA-II, the world’s most flexible and energy-efficient AI accelerator

AI Accelerator

Efficient Hardware

Modules and Cards powered by the latest SAKURA-II AI Accelerators

Deployable Systems

Unique Software

Proprietary Architecture