AI Inference for Automotive Sensing

Faster, higher resolution sensors in different modalities

More processing with less power consumption ahead

The automotive industry has been working on advanced driver assistance systems (ADAS), where AI inference plays a big role. Next, self-driving vehicles will usher in faster, higher resolution sensors, likely in three modalities, requiring more AI operations. Even as the demand for sensor processing grows, the power consumed by sensors and processing must drop if vehicles are to meet their range goals.

Lidar operates on a laser beam rapidly scanned in 3D space. Its output is a 3D point cloud indicating distances to objects the beam touches. Point clouds can be highly detailed but difficult to interpret, especially as a vehicle and surrounding objects move.
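As a rough illustration of how a single lidar return becomes one point in the cloud, the sketch below converts a measured range and the beam's two scan angles into x, y, z coordinates. The function name, the axis convention (x forward, y left, z up), and the angle definitions are assumptions made for this example; real sensors document their own conventions.

```python
import math
from typing import Tuple


def lidar_return_to_point(
    range_m: float, azimuth_deg: float, elevation_deg: float
) -> Tuple[float, float, float]:
    """Convert one lidar return to (x, y, z) in meters.

    Assumed convention (hypothetical, for illustration only):
    x forward, y left, z up; azimuth is measured counterclockwise
    from the x axis, elevation upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the horizontal plane
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)
```

Applying the same conversion to every return in a scan yields the point cloud; the interpretation problem described above begins only after this step.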

Advanced modeling can find scan-to-scan differences, such as determining when another vehicle has pulled alongside, is in a blind spot, or is straying from its lane. Lidar has one other advantage: it can sense height, including clearances to bridges and parking structures, which is essential for high-profile vehicles.
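The height-sensing idea can be sketched as a simple clearance check over a point cloud. This is a minimal illustration, not a production perception algorithm: the function names, the corridor dimensions, the ground tolerance, and the safety margin are all hypothetical, and the points are assumed to be already expressed in a vehicle frame with z measured up from the road surface.

```python
from typing import Iterable, Optional, Tuple

# (x forward, y left, z up) in meters; z is measured from the road surface.
Point = Tuple[float, float, float]


def lowest_return_in_corridor(
    points: Iterable[Point],
    half_width_m: float,
    max_range_m: float,
    ground_tolerance_m: float = 0.2,
) -> Optional[float]:
    """Height of the lowest non-ground return in the corridor ahead, or None."""
    heights = [
        z
        for x, y, z in points
        if 0.0 < x <= max_range_m          # ahead of the vehicle, within range
        and abs(y) <= half_width_m         # inside the vehicle's swept width
        and z > ground_tolerance_m         # ignore returns from the road itself
    ]
    return min(heights) if heights else None


def can_pass_under(
    points: Iterable[Point],
    vehicle_height_m: float,
    half_width_m: float = 1.3,    # assumed half-width of the corridor
    max_range_m: float = 50.0,    # assumed look-ahead distance
    safety_margin_m: float = 0.3, # assumed extra clearance
) -> bool:
    """True if nothing in the corridor is lower than the vehicle plus margin."""
    lowest = lowest_return_in_corridor(points, half_width_m, max_range_m)
    return lowest is None or lowest >= vehicle_height_m + safety_margin_m
```

For example, a scan containing a bridge deck at 4.2 m would let a 3.5 m vehicle through under these assumed margins, while a 4.0 m vehicle would be flagged.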

Much of the research into AI inference is focused on analyzing these point clouds, since many types of information can be harvested from them. AI inference techniques such as open-set semantic segmentation may help with unstructured lidar data.

Cameras are good at replicating many of the features of human vision. Increasing frame rates and pixel resolution produces higher quality images. But these increases introduce a challenge: more frames and pixels mean more processing.
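A quick back-of-the-envelope calculation shows how fast the pixel load grows. The resolutions and frame rates below are illustrative choices, not figures from any particular camera or from this article.

```python
def pixels_per_second(width: int, height: int, frames_per_second: int) -> int:
    """Raw pixel throughput a single camera delivers to the processing pipeline."""
    return width * height * frames_per_second


# Illustrative comparison (assumed values): 1080p at 30 fps vs. 4K UHD at 60 fps.
baseline = pixels_per_second(1920, 1080, 30)  # 62,208,000 pixels/s
upgraded = pixels_per_second(3840, 2160, 60)  # 497,664,000 pixels/s

print(upgraded // baseline)  # prints 8
```

Quadrupling the pixel count and doubling the frame rate multiplies the raw input by eight, before counting any additional cameras on the vehicle.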

Increased detail means an object in the distance may be detected sooner. This may buy critical time, especially for a heavy truck at highway speeds, which requires more distance to maneuver and stop. Accurate detection may require semantic segmentation, in which every pixel in a scene is assigned a label.
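To see why earlier detection matters more for a heavy vehicle, a simple constant-deceleration model can help. This is a textbook approximation under stated assumptions, not a vehicle-dynamics model: the function names are hypothetical, and any reaction time or deceleration values passed in are the caller's assumptions.

```python
def stopping_distance_m(
    speed_mps: float, reaction_time_s: float, deceleration_mps2: float
) -> float:
    """Distance traveled during the reaction time plus braking distance.

    Assumes constant deceleration: d = v * t_react + v^2 / (2 * a).
    """
    if deceleration_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    return speed_mps * reaction_time_s + speed_mps**2 / (2.0 * deceleration_mps2)


def time_gained_s(extra_detection_range_m: float, speed_mps: float) -> float:
    """Extra time available when an object is detected farther away."""
    if speed_mps <= 0:
        raise ValueError("speed must be positive")
    return extra_detection_range_m / speed_mps
```

In this model, halving the achievable deceleration (as might be assumed for a loaded truck relative to a car) doubles the braking term, so each extra meter of detection range is worth proportionally more.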

AI can give cameras more capabilities, such as real-time depth estimation, which is crucial if camera-only systems in self-driving vehicles are to perceive their surroundings correctly. Attention-based AI models may also improve detection of objects in camera images.

Making vehicles smarter with AI inference is only part of the change needed to help roads be safer and more efficient. Another initiative is intelligent transportation, adding features to roads for gathering real-time data and assisting drivers.

Vehicle counting has existed for years, but now much more information can be extracted from cameras and other sensors. Vehicle types can be identified, as well as pedestrians and bicycles. Vehicle spacing can be monitored and lane changes can be observed. Emergency vehicles can be notified and guided to incidents.
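Spacing monitoring, for instance, is often expressed as time headway: the gap to the vehicle ahead divided by the follower's speed. The sketch below assumes a roadside system has already produced a position along the lane and a speed for each vehicle; the function names, the data layout, and the 2-second threshold are hypothetical, and the gap is measured between reference points, ignoring vehicle length.

```python
from typing import List, Tuple

# (position along the lane in meters, speed in m/s) for one detected vehicle
Track = Tuple[float, float]


def time_headways_s(vehicles: List[Track]) -> List[float]:
    """Time headway of each follower relative to the vehicle directly ahead.

    Results are ordered from the front of the lane to the back.
    """
    ordered = sorted(vehicles, key=lambda v: v[0], reverse=True)  # lead vehicle first
    headways = []
    for leader, follower in zip(ordered, ordered[1:]):
        gap_m = leader[0] - follower[0]
        speed = follower[1]
        # A stopped follower never closes the gap, so report an infinite headway.
        headways.append(gap_m / speed if speed > 0 else float("inf"))
    return headways


def too_close_flags(vehicles: List[Track], min_headway_s: float = 2.0) -> List[bool]:
    """True for each follower whose headway is below the assumed threshold."""
    return [h < min_headway_s for h in time_headways_s(vehicles)]
```

With three vehicles at 100 m, 60 m, and 40 m, the followers both traveling at 20 m/s, the headways are 2.0 s and 1.0 s, so only the last vehicle would be flagged.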

Vehicle-to-everything (V2X) systems also come into play. V2X can help enforce vehicle speeds and spacing. Intelligent transportation may also eventually coordinate traffic on roads with other modalities such as light rail and air taxis.

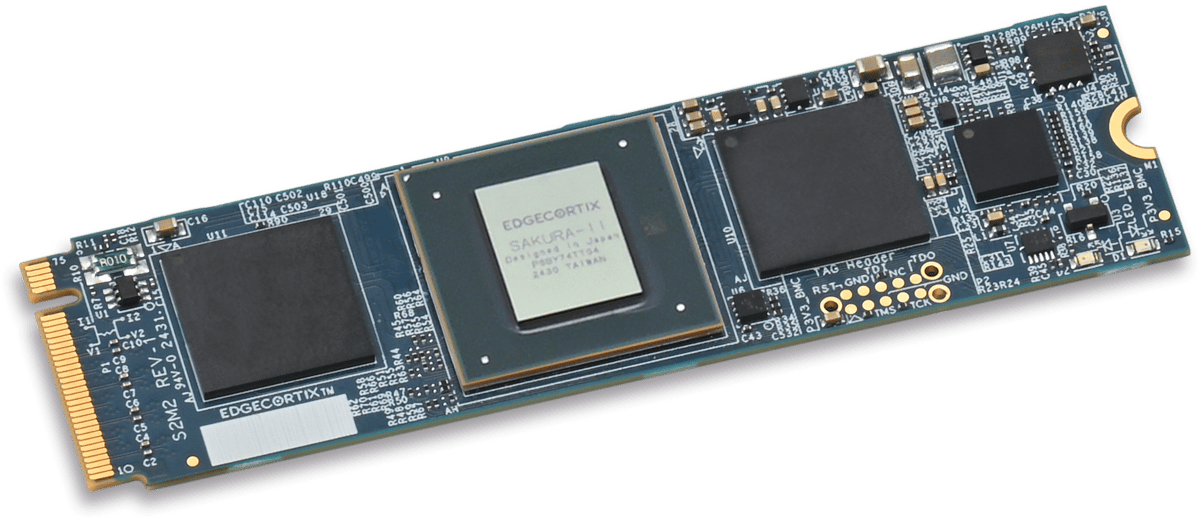

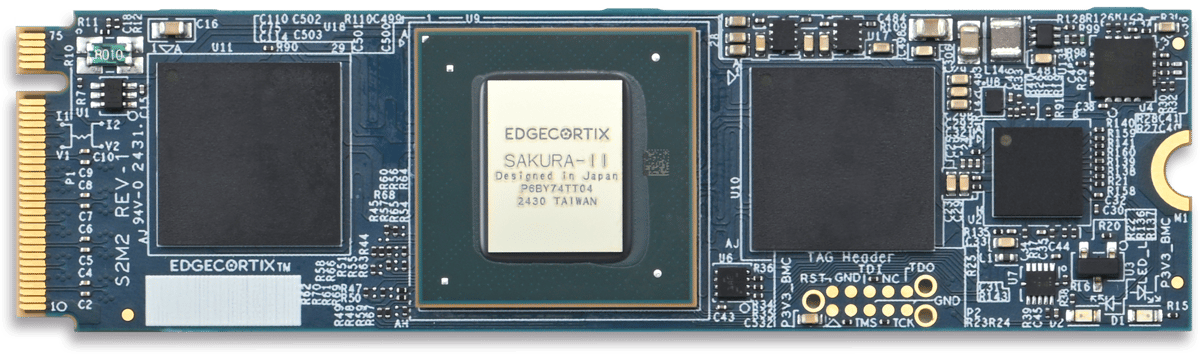

SAKURA-II M.2 Modules and PCIe Cards

EdgeCortix SAKURA-II can be easily integrated into a host system for software development and AI model inference tasks.

Order an M.2 Module or a PCIe Card and get started today!

EdgeCortix Edge AI Platform

Full-Stack Solution: Integrated Platform Increases Ecosystem Value Over Time

Unique Software, Proprietary Architecture, AI Accelerator, Efficient Hardware, Modules and Cards powered by the latest SAKURA-II, AI Accelerators, Deployable Systems

"Given the tectonic shift in information processing at the edge, companies are now seeking near-cloud-level performance where data curation and AI-driven decision making can happen together. Due to this shift, the market opportunity for the EdgeCortix solutions set is massive, driven by the practical business need across multiple sectors which require both low power and cost-efficient intelligent solutions. Given the exponential global growth in both data and devices, I am eager to support EdgeCortix in their endeavor to transform the edge AI market with an industry-leading IP portfolio that can deliver performance with orders of magnitude better energy efficiency and a lower total cost of ownership than existing solutions."

"Improving the performance and the energy efficiency of our network infrastructure is a major challenge for the future. Our expectation of EdgeCortix is to be a partner who can provide both the IP and expertise that is needed to tackle these challenges simultaneously."

"With the unprecedented growth of AI/machine learning workloads across industries, the solution we're delivering with leading IP provider EdgeCortix complements BittWare's Intel Agilex FPGA-based product portfolio. Our customers have been searching for this level of AI inferencing solution to increase performance while lowering risk and cost across a multitude of business needs both today and in the future."

“EdgeCortix is in a truly unique market position. Beyond simply taking advantage of the massive need and growth opportunity in leveraging AI across many key business sectors, it’s the business strategy with respect to how they develop their solutions for their go-to-market that will be the great differentiator. In my experience, most technology companies focus very myopically on delivering great code or perhaps semiconductor design. EdgeCortix’s secret sauce is in how they’ve co-developed their IP, applying equal importance to both the software IP and the chip design, creating a symbiotic software-centric hardware ecosystem. This sets EdgeCortix apart in the marketplace.”